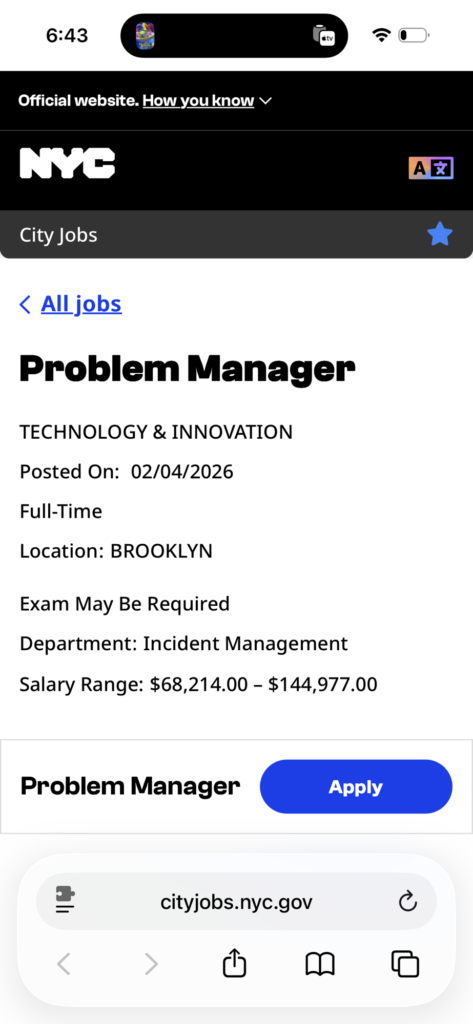

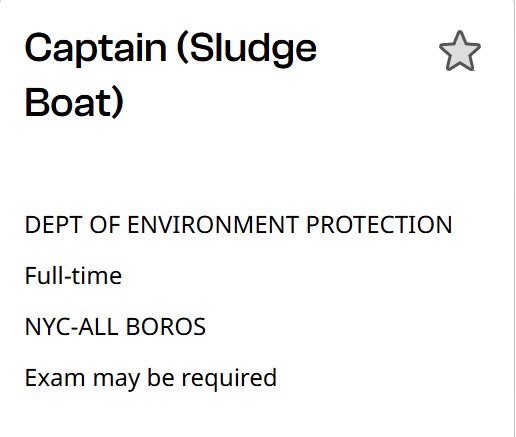

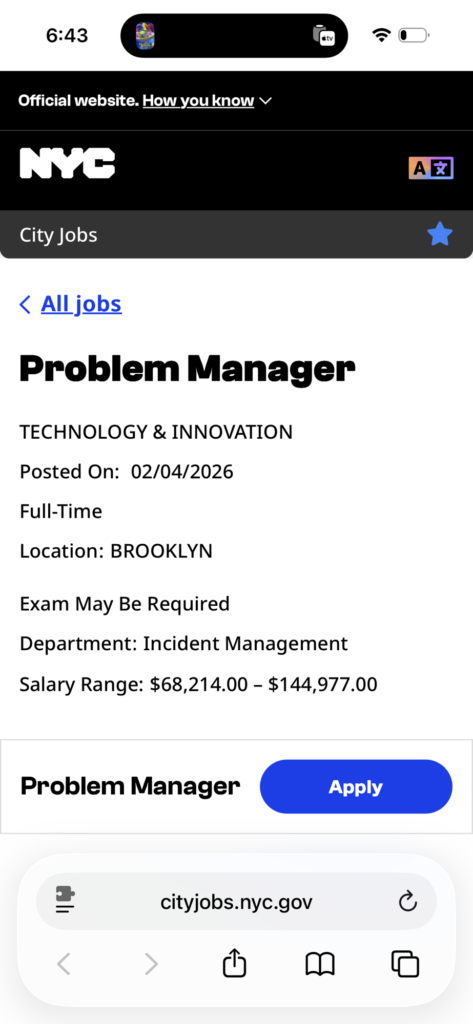

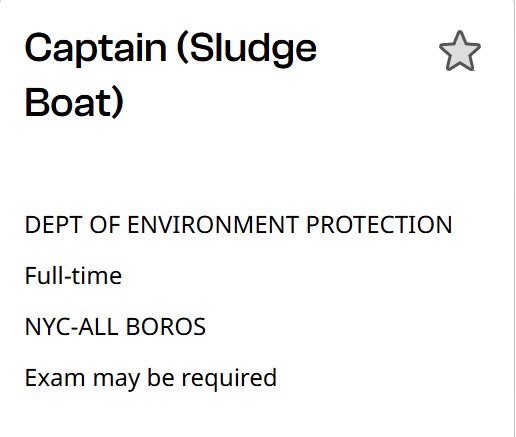

The city of New York dropped a ton of new openings today

https://cityjobs.nyc.gov

Here’s a couple of my favorites

Social media algorithms use many signals to choose winners (going viral) and losers (most of us). Every now and then you stumble upon one that really matters. I just found out that when a big account blocks you, it’s a HUGE signal to the algo not to show your stuff.

Recently Dare Obasanjo was being his typical man-child self, and I called him out on his childish behavior. He then blocked me, as is his right (coward).

Dare: Google declares it won’t have ads in Gemini!

Me: They put ads right next to the AI overview on the Search page

Dare: link to article reiterating what Google said

Me: It’s the same AI

Dare: Take the L and move on

Me: Obnoxious as always I see. I remember this when we were at Microsoft too. Grow up.

I really am surprised he hasn’t matured in his online behavior in all those years.

I’ve never been a fan of Google. I always thought “Don’t be evil” was naïve at best, and a lie and an attempt to reputation-wash at worst.

I was vindicated when they removed it after building military robots. They do evil things all the time, including shutting down people’s accounts with no recourse and not fixing bugs in Android. One time I texted “I love you” to my then-girlfriend and it went to my BOSS, who was quite confused. I found the bug online with “Won’t Fix” as the status.

So when Google says they won’t put ads on their AI, when they clearly already are, I take issue.

Unfortunately the block by such a large account put a severe distribution penalty on my Threads account. So I’m in distribution jail until the data used for that flag falls out of retention.

In the 1970s a researcher named Bruce Alexander was researching addiction using rats.

Rats in lab cages were given a choice of plain water or water laced with morphine. They chose the morphine like all the time. Many overdosed.

Then he built “Rat Park”. A 200x larger play space with food, play toys, other rats to play with.

They were given the same choice of water. Most chose plain water, and none overdosed. He forced one group to get addicted first, but once in Rat Park they chose to go through withdrawal.

I think about this study from time to time when it seems like my alcohol intake is a bit high or I start smoking, etc.

Sources:

https://en.wikipedia.org/wiki/Rat_Park

https://www.youtube.com/watch?v=PY9DcIMGxMs

Originally posted on Threads: https://www.threads.com/@jimfwallace/post/DQu5b82ATb5

Special thanks to all the brands who sent me birthday wishes 💕 ❤️ 💞 🥰 💙 ♥️ 😍♥️❤️💙🥰

I could stop the bitcoin sell off simply by taking a short position… but I won’t.

Your task management just got a conversational upgrade.

What if managing your todos felt less like clicking through an app and more like talking to a helpful assistant? That’s exactly what Taskleef’s new MCP Server makes possible.

With Anthropic’s recent launch of Cowork plugins—and the growing ecosystem of MCP (Model Context Protocol) integrations—we’re excited to announce that Taskleef now integrates directly with Claude Code and Claude Cowork.

Instead of switching between apps, opening browsers, or remembering which board has what task, you can now manage everything through conversation:

Natural language in, task management out.

Taskleef’s MCP Server speaks the same language as Claude—literally. The Model Context Protocol is the open standard that connects AI assistants to external tools securely. When you configure Claude with Taskleef’s MCP server, Claude gains access to 39 different tools for managing your todos, boards, tags, and more.

Here’s the magic: you don’t need to learn these tools. Just describe what you want. Claude figures out which tools to call and handles the rest.
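To give a feel for what happens behind the scenes, here is a minimal sketch of the kind of message an MCP client sends when it calls a tool. The `tools/call` method and the JSON-RPC 2.0 envelope come from the Model Context Protocol itself; the tool name `create_todo` and its arguments are hypothetical placeholders, not Taskleef's actual tool names.

```python
import json
from itertools import count

# Simple counter for JSON-RPC request ids.
_request_ids = count(1)


def build_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(
    "create_todo",
    {"title": "Review design", "tags": ["work"]},
)
print(json.dumps(request, indent=2))
```

You never write this by hand. Claude picks the tool and fills in the arguments from what you typed.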

Todo Management: Create, complete, search, and organize your tasks. Taskleef understands natural dates like “tomorrow,” “next Monday,” or “in 3 days,” and even extracts tags automatically when you write something like “Review design [work].”

Kanban Boards: Move cards between columns, reorder priorities, and manage WIP limits, all through conversation.

Collaboration: Add comments, manage board members, and control access with Editor, Viewer, and Owner roles.

Smart Organization: Tag your tasks, filter by status, and keep your inbox clean without manual sorting.
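The natural dates and bracketed tags mentioned under Todo Management can be illustrated with a small sketch. This is not Taskleef's implementation, just one simple way such parsing could work. The function names `extract_tags` and `parse_natural_date` are made up for this example, and treating “next Monday” as the first Monday strictly after today is an assumption.

```python
import re
from datetime import date, timedelta

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def extract_tags(text):
    """Split 'Review design [work]' into a clean title and a list of tags."""
    tags = TAG_PATTERN.findall(text)
    title = " ".join(TAG_PATTERN.sub("", text).split())
    return title, tags


def parse_natural_date(phrase, today):
    """Resolve a few simple date phrases relative to `today`, else None."""
    phrase = phrase.strip().lower()
    if phrase == "today":
        return today
    if phrase == "tomorrow":
        return today + timedelta(days=1)
    match = re.fullmatch(r"in (\d+) days?", phrase)
    if match:
        return today + timedelta(days=int(match.group(1)))
    match = re.fullmatch(r"next (\w+)", phrase)
    if match and match.group(1) in WEEKDAYS:
        target = WEEKDAYS.index(match.group(1))
        # Assumption: "next X" means the first X strictly after today (1 to 7 days ahead).
        days_ahead = (target - today.weekday() - 1) % 7 + 1
        return today + timedelta(days=days_ahead)
    return None


title, tags = extract_tags("Review design [work]")
due = parse_natural_date("in 3 days", date(2025, 1, 1))
print(title, tags, due)
```

Passing `today` in explicitly keeps the sketch easy to test; a real service would also need to deal with time zones and a much wider range of phrases.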

Setting up Taskleef with Claude takes just a few steps.

Full setup instructions are in our MCP Server documentation.

Anthropic’s knowledge-work-plugins repository shows where this is headed: AI assistants that integrate with your actual tools, not just chat about them. The 11 official plugins—covering sales, legal, marketing, customer support, and more—all rely on MCP connections to work.

Taskleef’s MCP server means you’re not locked into someone else’s productivity system. You get the benefits of AI-powered task management with a tool that’s designed around how you actually work.

This is just the beginning. We’re exploring deeper integrations with the Cowork plugin ecosystem, including potential skill bundles that combine Taskleef with calendar management, email triage, and project workflows.

For now, we’re focused on making the core experience seamless. Try it out, let us know what workflows you want to automate, and help us shape what comes next.

Ready to manage your todos with AI?

Using only economic theory, explain why the best economists in the world are not all billionaires

Tags System Overhaul

Task Management

File Attachments

Real-Time Notifications

Authentication & Security

User Experience Improvements

AI Features

Bug Fixes

Check it out: https://taskleef.com

What would you do with AI that was 2x as capable as it is today?

4x?

16x?

Eventually the answer is “everything”

That’s the trajectory we’re currently on

What’s up with companies of a certain size thinking that it’s totally fine to expect customers to learn about their internal departmental structure?

I just had a brain-dead exchange with a sales rep from Stripe. They asked if they could help me finish setting up my account, since as of now I’m not generating any revenue for them.

I told them the issue was on their end, that they wouldn’t accept all the paperwork I’d already sent them to prove my LLC exists, that I am the sole owner, that it’s at the address it’s at.

When I mentioned this, he sent back a canned template about how financial institutions are heavily regulated (no shit) and need to adhere to Know Your Customer and Anti-Money Laundering regulations.

I replied that my bank is regulated too, and it opened my account without a problem, yet Stripe won’t accept the bank statement with my address that matches my government-issued ID.

I told him that he has more power and access than I do to suggest a change in policy, which is why I was telling him all of this. Oh and he reached out to me.

He wrote back that he can’t suggest a change in policy, reiterated that he’s on the sales team and that I needed to talk to another department.

Wild. Like I get that you don’t want to bother because it’s not worth your time, but that’s different from saying you can’t “because you’re on the sales team” wtf? The policy is literally stopping you from making sales. In my experience the ONLY people the executives listen to are the sales people.

I think that if a company is so big that its people feel their roles are too narrowly defined to do anything, then it’s time for it to be broken up into smaller companies. My legal test for this would be 1 hop. If you can’t get the issue resolved in 1 hop from intake, meaning the front-line person gets you to the right place in a single transfer, then your company is too big.